SIFT (Scale-Invariant Feature Transform)

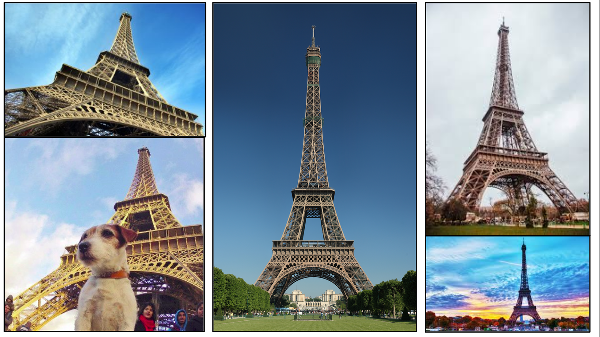

Detects distinctive keypoints (local features) in an image that are invariant to changes in scale and rotation and robust to moderate affine distortion

We naturally understand that the scale or angle of the image may change, but the object remains the same.

BUT machines struggle with the same idea. It is hard for them to identify the object in an image once the angle or the scale changes.

The major advantage of SIFT features is that they are not affected by the size or orientation of the image.

SIFT proceeds in the following steps:

Constructing a Scale Space

: To make sure that features are scale-independent

- Scale space: collection of images having different scales, generated from a single image
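A minimal sketch of how such a collection of images can be generated, assuming the usual approach of blurring one image with Gaussians of increasing sigma. The function names, the starting sigma of 1.6, and the step factor of sqrt(2) are illustrative assumptions, not values taken from the summary above.

```python
import math

def gaussian_kernel(sigma):
    """1-D Gaussian kernel, truncated at about 3 sigma and normalised to sum to 1."""
    radius = max(1, int(math.ceil(3 * sigma)))
    weights = [math.exp(-(i * i) / (2 * sigma * sigma)) for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]

def blur_rows(image, kernel):
    """Convolve every row with the kernel, clamping indices at the image border."""
    radius = len(kernel) // 2
    width = len(image[0])
    return [
        [
            sum(k * row[min(max(x + i - radius, 0), width - 1)] for i, k in enumerate(kernel))
            for x in range(width)
        ]
        for row in image
    ]

def transpose(image):
    return [list(column) for column in zip(*image)]

def gaussian_blur(image, sigma):
    """Separable Gaussian blur: blur the rows, then blur the columns."""
    kernel = gaussian_kernel(sigma)
    return transpose(blur_rows(transpose(blur_rows(image, kernel)), kernel))

def build_octave(image, base_sigma=1.6, levels=4, step=math.sqrt(2)):
    """Return `levels` copies of the image, each blurred more than the previous one."""
    return [gaussian_blur(image, base_sigma * step ** i) for i in range(levels)]
```

Each image in the returned list has the same size as the input; only the amount of blur grows, which is what lets later steps look for features at several scales.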

Keypoint Localisation

: Identifying the suitable features or keypoints

- Finds the local maxima and minima across the Difference of Gaussians (DoG) images built from the scale space
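A rough sketch of the extremum search, assuming the images are already stacked as a list of same-sized 2-D layers (in SIFT these would be difference-of-Gaussians layers). A pixel is kept when it is strictly larger or strictly smaller than all 26 neighbours in its own layer and the two adjacent layers. The function names are hypothetical.

```python
def is_extremum(stack, s, y, x):
    """True if stack[s][y][x] beats all 26 neighbours in the 3x3x3 cube around it."""
    value = stack[s][y][x]
    neighbours = [
        stack[s + ds][y + dy][x + dx]
        for ds in (-1, 0, 1)
        for dy in (-1, 0, 1)
        for dx in (-1, 0, 1)
        if (ds, dy, dx) != (0, 0, 0)
    ]
    return value > max(neighbours) or value < min(neighbours)

def find_extrema(stack):
    """Return (layer, row, column) for every interior local maximum or minimum."""
    found = []
    for s in range(1, len(stack) - 1):
        for y in range(1, len(stack[s]) - 1):
            for x in range(1, len(stack[s][y]) - 1):
                if is_extremum(stack, s, y, x):
                    found.append((s, y, x))
    return found
```

A full SIFT implementation would go on to refine these candidates and discard low-contrast or edge-like ones; that part is left out here.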

Orientation Assignment

: Ensure the keypoints are rotation invariant

Keypoint Descriptor

: Assign a unique fingerprint to each keypoint

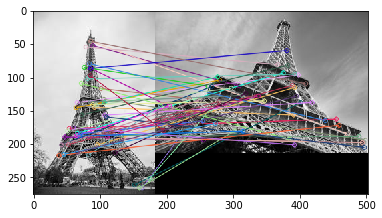

Feature Matching

- Uses BFMatcher (brute-force matcher), which compares every descriptor in one image against every descriptor in the other
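The idea behind brute-force matching can be sketched in a few lines of plain Python. This is not the OpenCV `BFMatcher` class itself, only an illustration of the same strategy, assuming float descriptors compared by Euclidean distance; `brute_force_match` is a hypothetical name.

```python
import math

def brute_force_match(query, train):
    """For each query descriptor, return (query_index, train_index, distance)
    of its nearest train descriptor, found by checking every candidate."""
    matches = []
    for query_index, q in enumerate(query):
        train_index, distance = min(
            ((ti, math.dist(q, t)) for ti, t in enumerate(train)),
            key=lambda pair: pair[1],
        )
        matches.append((query_index, train_index, distance))
    return matches

# Two tiny 2-D "descriptors" per image, just to show the output shape.
matches = brute_force_match([(0.0, 0.0), (5.0, 5.0)], [(5.0, 4.0), (0.0, 1.0)])
```

The cost grows with the product of the two descriptor counts, which is why this approach is called brute force.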

The above is a summary of the source article.

SURF (Speeded-Up Robust Features)

A fast and robust algorithm for local, similarity-invariant representation and comparison of images

Its operators are computed quickly using box filters → real-time applications enabled

SURF has 2 steps:

1. Feature Extraction

A very basic approximation of the Hessian matrix is used for interest point detection

Integral Images

A quick and effective way of calculating the sum of pixel values in an image, or in any rectangular region of it

→ fast computation of box-type convolution filters
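A small sketch of why box sums become cheap, assuming the common convention of padding the table with one extra row and column of zeros. The function names are illustrative. Once the table is built, the sum over any rectangle needs only four lookups, regardless of the rectangle's size.

```python
def integral_image(image):
    """table[y][x] holds the sum of all pixels above and to the left of (y, x)."""
    height, width = len(image), len(image[0])
    table = [[0] * (width + 1) for _ in range(height + 1)]
    for y in range(height):
        row_sum = 0
        for x in range(width):
            row_sum += image[y][x]
            table[y + 1][x + 1] = table[y][x + 1] + row_sum
    return table

def box_sum(table, top, left, bottom, right):
    """Sum of the pixels in rows top..bottom-1 and columns left..right-1."""
    return table[bottom][right] - table[top][right] - table[bottom][left] + table[top][left]

image = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]
table = integral_image(image)
whole = box_sum(table, 0, 0, 3, 3)         # every pixel
lower_right = box_sum(table, 1, 1, 3, 3)   # 5 + 6 + 8 + 9
```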

Hessian matrix-based interest points

Uses the determinant of the Hessian matrix to select both the location and the scale of interest points
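For reference, the measure can be written out as follows. The notation here ($L_{xx}$ for Gaussian second-derivative responses at point $\mathbf{x}$ and scale $\sigma$, $D_{xx}$ for their box-filter approximations) and the weight $w \approx 0.9$ follow the original SURF paper rather than the summary above, so treat them as added context.

```latex
\mathcal{H}(\mathbf{x}, \sigma) =
\begin{bmatrix}
L_{xx}(\mathbf{x}, \sigma) & L_{xy}(\mathbf{x}, \sigma) \\
L_{xy}(\mathbf{x}, \sigma) & L_{yy}(\mathbf{x}, \sigma)
\end{bmatrix},
\qquad
\det(\mathcal{H}_{\text{approx}}) = D_{xx}\, D_{yy} - (w\, D_{xy})^{2},
\quad w \approx 0.9
```

Points where this determinant peaks, across both position and scale, are taken as interest points.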

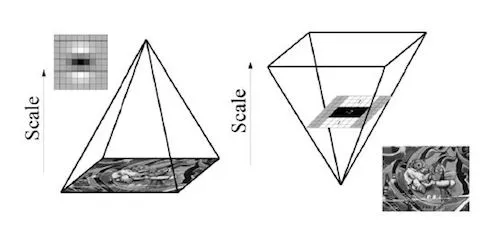

Scale-space representation

Scale spaces are usually implemented as image pyramids.

Instead of iteratively reducing the image size (left), the use of integral images allows the filter to be up-scaled at constant cost (right).

2. Feature Description

Orientation Assignment

Fixes a reproducible orientation based on information from a circular region around the keypoint, so that the descriptor is invariant to rotation

Descriptor Components

Constructs a square region aligned to the selected orientation and extracts the SURF descriptor from it.

https://medium.com/data-breach/introduction-to-surf-speeded-up-robust-features-c7396d6e7c4e

This section was written as a summary of the article linked above.

ORB (Oriented FAST and Rotated BRIEF)

ORB's feature detection performance is similar to SIFT's and better than SURF's, and it is much faster than SIFT!

ORB has 3 steps:

1. FAST (Features from Accelerated Segment Test): keypoint detector

→ locates corner-like keypoints that indicate where the determining edges are

Process:

1) Take a pixel p

2) Compare the brightness of p with the 16 pixels on a circle around p

3) Each of the 16 pixels on the circle falls into one of 3 classes: brighter than p / darker than p / similar to p

4) If at least 9 contiguous pixels on the circle are all brighter than p or all darker than p, p is selected as a keypoint!

→ able to locate determining edges! BUT there is no orientation component and there are no multiscale features yet
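The test described in the steps above can be sketched as follows. The sketch assumes the standard form of the segment test, in which the brighter (or darker) pixels must be contiguous on the circle and must differ from p by more than a threshold. The threshold of 20 and the function names are illustrative assumptions.

```python
# Offsets (dx, dy) of the 16 pixels on a circle of radius 3 around p, in ring order.
CIRCLE = [(0, -3), (1, -3), (2, -2), (3, -1), (3, 0), (3, 1), (2, 2), (1, 3),
          (0, 3), (-1, 3), (-2, 2), (-3, 1), (-3, 0), (-3, -1), (-2, -2), (-1, -3)]

def longest_circular_run(flags):
    """Length of the longest run of True values, treating the list as a ring."""
    if all(flags):
        return len(flags)
    best = run = 0
    for flag in flags + flags:  # doubling the list lets a run wrap past the end
        run = run + 1 if flag else 0
        best = max(best, run)
    return best

def is_fast_corner(image, x, y, threshold=20, n=9):
    """Segment test: n contiguous ring pixels all brighter or all darker than p."""
    p = image[y][x]
    ring = [image[y + dy][x + dx] for dx, dy in CIRCLE]
    brighter = [value > p + threshold for value in ring]
    darker = [value < p - threshold for value in ring]
    return longest_circular_run(brighter) >= n or longest_circular_run(darker) >= n
```

The caller is expected to keep (x, y) at least 3 pixels away from the image border, since the sketch does no bounds checking.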

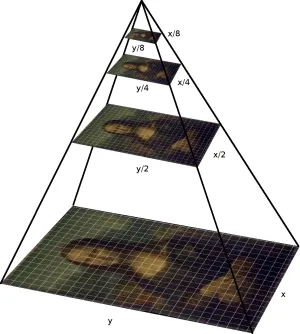

Multiscale image pyramid → partial scale invariance

※ image pyramid: a multiscale representation of a single image

- consists of a sequence of images, all of which are versions of the same image at different resolutions

ORB first builds the pyramid and then runs the FAST algorithm on every level → effectively locates keypoints at different scales

→ partial scale invariance!
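A minimal sketch of building such a pyramid, assuming each level halves the resolution by averaging 2x2 blocks. The factor of 2 is chosen only to keep the example short; real implementations may use a smaller step between levels. The function names are hypothetical.

```python
def downsample(image):
    """Halve the resolution by averaging each 2x2 block of pixels."""
    height, width = len(image) // 2, len(image[0]) // 2
    return [
        [
            (image[2 * y][2 * x] + image[2 * y][2 * x + 1]
             + image[2 * y + 1][2 * x] + image[2 * y + 1][2 * x + 1]) / 4
            for x in range(width)
        ]
        for y in range(height)
    ]

def build_pyramid(image, levels=3):
    """Level 0 is the original image; each further level is half the size."""
    pyramid = [image]
    while len(pyramid) < levels and min(len(pyramid[-1]), len(pyramid[-1][0])) >= 2:
        pyramid.append(downsample(pyramid[-1]))
    return pyramid
```

A keypoint detector would then be run on every level, and each detection scaled back to the coordinates of the original image.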

2. Intensity Centroid → orientation assignment, rotation invariance

ORB now assigns an orientation to each keypoint, such as left- or right-facing,

- depending on how the levels of intensity change around that keypoint

To detect this intensity change, the intensity centroid is used!

: The intensity centroid assumes that a corner’s intensity is offset from its center, and the vector from the center to the centroid may be used to impute an orientation

The patch is then rotated to a canonical orientation before the descriptor is computed

→ rotation invariance!
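The orientation computation can be sketched with image moments. This assumes a square patch centred on the keypoint (a simplification for brevity) and measures the angle with the y axis pointing down, as in image coordinates. `patch_orientation` is a hypothetical name.

```python
import math

def patch_orientation(patch):
    """Angle in radians of the vector from the patch centre to its intensity centroid."""
    centre = (len(patch) - 1) / 2
    m10 = m01 = 0.0  # first-order moments about the patch centre
    for row_index, row in enumerate(patch):
        for col_index, value in enumerate(row):
            m10 += (col_index - centre) * value
            m01 += (row_index - centre) * value
    return math.atan2(m01, m10)

# A patch that is bright only along its right edge points to the right (angle 0).
right_heavy = [[0, 0, 0, 0, 1] for _ in range(5)]
angle = patch_orientation(right_heavy)
```

Rotating the image rotates the centroid by the same amount, so describing the patch relative to this angle is what makes the result independent of rotation.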

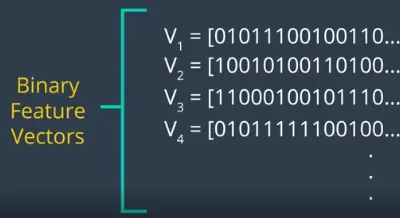

3. BRIEF (Binary Robust Independent Elementary Features): descriptor

→ binary feature vector

Process:

1) Smooths the image using a Gaussian kernel, so that the descriptor is less sensitive to high-frequency noise

2) Selects a random pair of pixels in a patch (a defined neighborhood around a keypoint found by FAST)

- The first pixel in the random pair

: drawn from a Gaussian distribution centered on the keypoint, with a standard deviation (spread) of σ

- The second pixel in the random pair

: drawn from a Gaussian distribution centered on the first pixel, with a standard deviation (spread) of σ/2

3) If the first pixel is brighter than the second → assigns 1 to the corresponding bit, otherwise 0

4) For an N-bit vector, repeats steps 2) and 3) N times for a keypoint

→ the N bits together form the binary feature vector that represents the keypoint!

- such a vector is created for each keypoint in an image
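The steps above can be sketched as follows. The sketch skips the Gaussian smoothing step, clamps sample positions at the image border, and uses a fixed random seed so that the same pixel pairs are reused for every keypoint (otherwise descriptors would not be comparable). All function names and default values are illustrative assumptions.

```python
import random

def make_test_pairs(n_bits, sigma, seed=0):
    """Sample n_bits pixel-offset pairs: the first offset around the keypoint
    (spread sigma), the second around the first offset (spread sigma / 2)."""
    rng = random.Random(seed)
    pairs = []
    for _ in range(n_bits):
        x1, y1 = rng.gauss(0, sigma), rng.gauss(0, sigma)
        x2, y2 = rng.gauss(x1, sigma / 2), rng.gauss(y1, sigma / 2)
        pairs.append(((round(x1), round(y1)), (round(x2), round(y2))))
    return pairs

def brief_descriptor(image, keypoint, pairs):
    """One bit per pair: 1 if the first pixel is brighter than the second, else 0."""
    kx, ky = keypoint
    height, width = len(image), len(image[0])

    def pixel(dx, dy):
        x = min(max(kx + dx, 0), width - 1)   # clamp to the image border
        y = min(max(ky + dy, 0), height - 1)
        return image[y][x]

    return [1 if pixel(*first) > pixel(*second) else 0 for first, second in pairs]

def hamming(d1, d2):
    """Number of differing bits, the usual distance between binary descriptors."""
    return sum(b1 != b2 for b1, b2 in zip(d1, d2))
```

Because the descriptor is a bit vector, two keypoints can be compared by counting differing bits, which is much cheaper than a floating-point distance.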

However, BRIEF isn’t invariant to rotation, so ORB uses rBRIEF (Rotation-aware BRIEF)

https://medium.com/data-breach/introduction-to-orb-oriented-fast-and-rotated-brief-4220e8ec40cf

This section was written as a summary of the article linked above.
